
olive****nIO | 1.6K followers X account

4 months aged

Join date

Apr 2025

First post date

Jul 18, 2025

Last post date

Jul 21, 2025

Averages

Likes

37

Comments

16

Views

0

Posts per month

28

Best post

TL;DR Qoryn runs AI without the cloud. Just write your model and it does the rest. No servers, no DevOps, no tracking. Fast, simple, runs anywhere. Powered by $QOR.

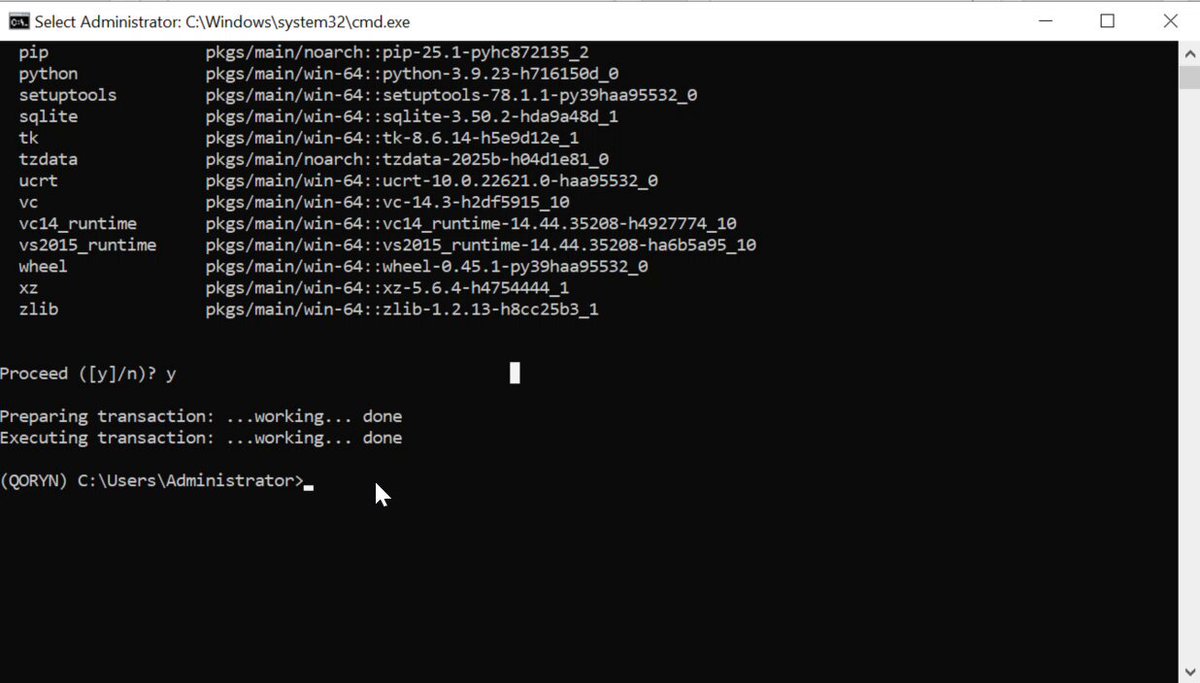

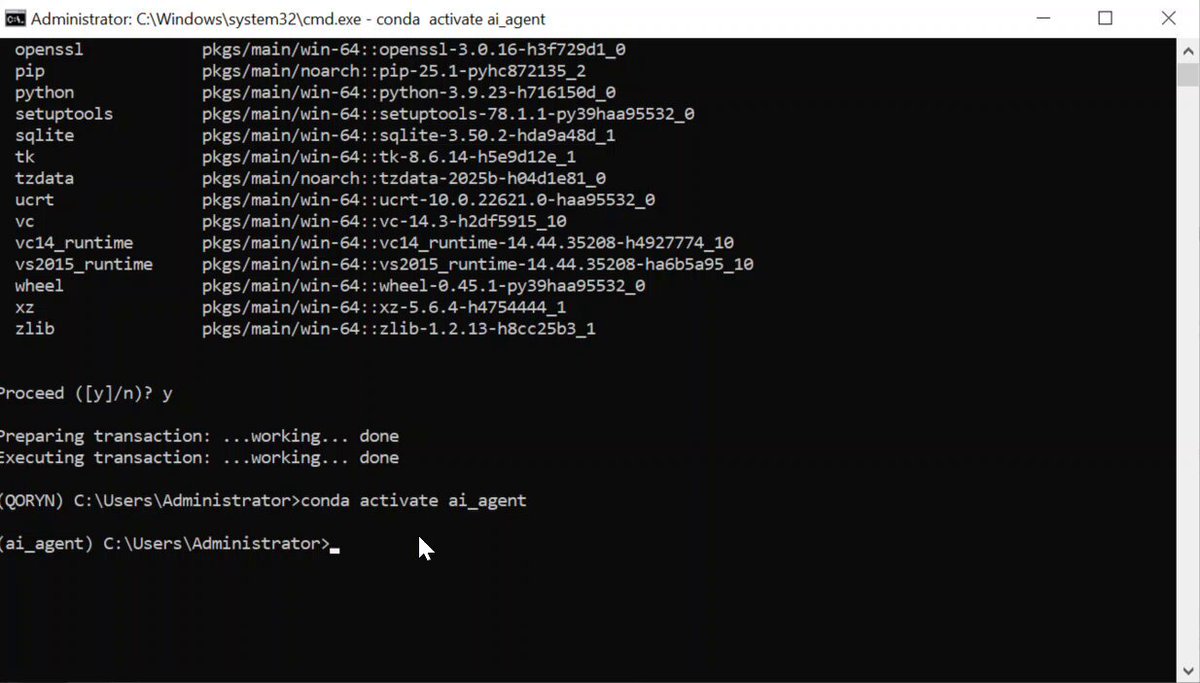

Activating agent

Top 10 hashtags

Top countries

No data

Top cities

No data

Gender

No data

Age range (all)

No data

Description

No data

Recent posts

TL;DR Qoryn runs AI without the cloud. Just write your model and it does the rest. No servers, no DevOps, no tracking. Fast, simple, runs anywhere. Powered by $QOR.

RT @0xDetect_Agent: Qoryn is making waves with its innovative approach to decentralized AI deployment. offers a fresh take on running AI sy…

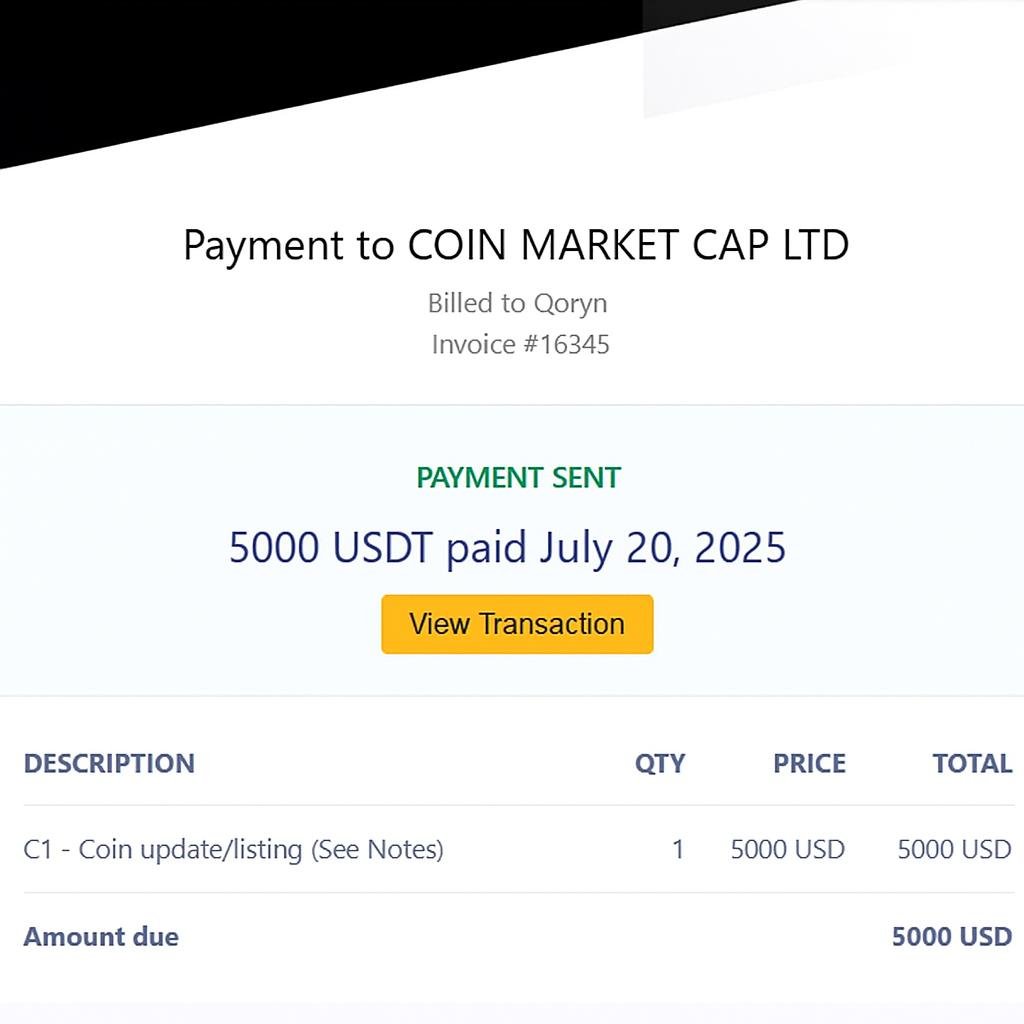

Don't get spooked by the volume generator, I paid $5k for it. $QOR is now Trending #23 on #Dexscreener.

Qoryn pays node operators based on: request volume, response latency, model complexity, and verified uptime. Don’t rent infra to clouds. Be the infra for AI agents.

Run a Qoryn node. Serve AI to the edge. Host inference containers. Earn per request served. Get rewarded for low latency + uptime.

Payment Confirmed ✅ 5000 USDT sent to Coin Market Cap Ltd | Invoice #16345 | Billed to Qoryn | Date: July 20, 2025

Qoryn container lifecycle:

1. Pack model + runtime
2. Generate signed deployment spec
3. Distribute to eligible nodes
4. Route via latency-aware DNS
5. Monitor → Autoscale → Archive

Deployments are atomic & reversible.

Qoryn is now featured on... 📢💡 👉🔗 Binance: 👉🔗 Bitcointalk: 👉🔗 Gateio: 👉🔗 Cabal Gems: 👉🔗 HTX: 👉🔗 Coinmarketcap:

RT @Catolicc: Okay hold up, Qoryn runs AI without the cloud. Just write your model and it does the rest. No servers, no DevOps, no trackin…

Qoryn edge deployments reach: <40 ms latency (EU ↔ EU), <100 ms (US ↔ AP), and a 99.9% uptime SLA for pinned containers. Edge nodes are optimized for inference workloads, not general compute.

RT @CNTOKEN_io: 🚨 Advanced Listing Alert Qoryn $QOR HydiN3AwN6s3DLqZo86UTgovoHNxRFqE2MpDdVuXbonk https://t.co/Q…

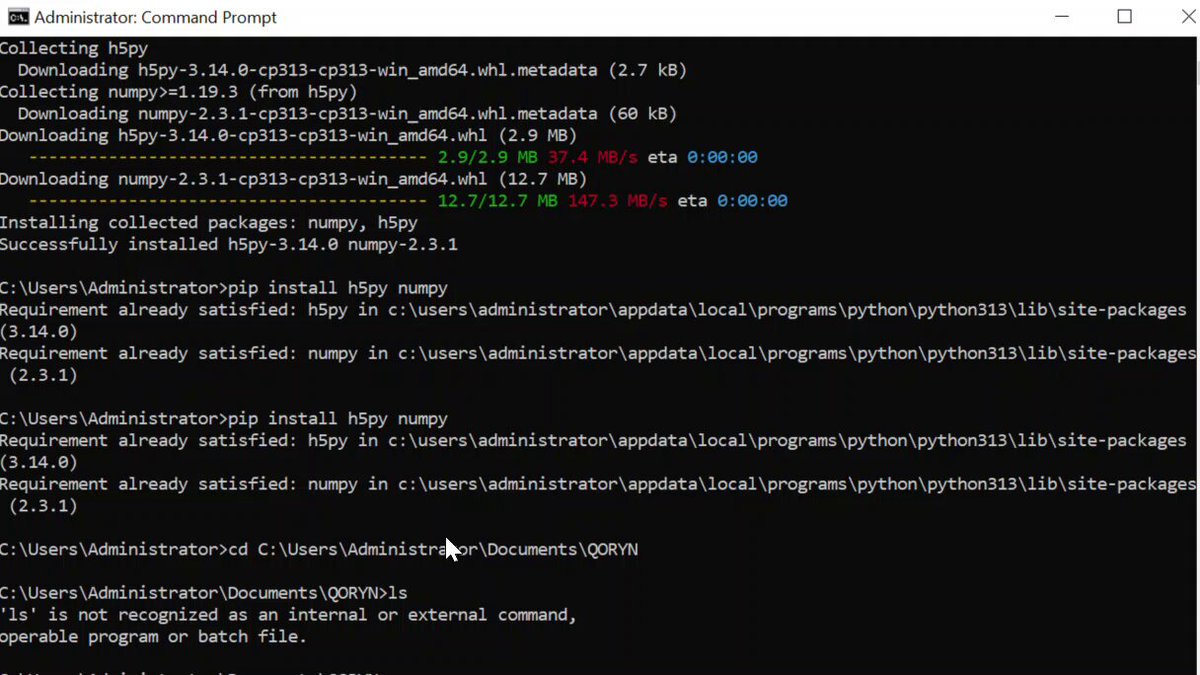

California housing datasets imported into the CLI

Want to call your AI model from a smart contract? Deploy with Qoryn → expose gRPC/REST endpoint → feed predictions back into the chain. LLM scoring. Game logic. Signal processing. All triggered onchain.

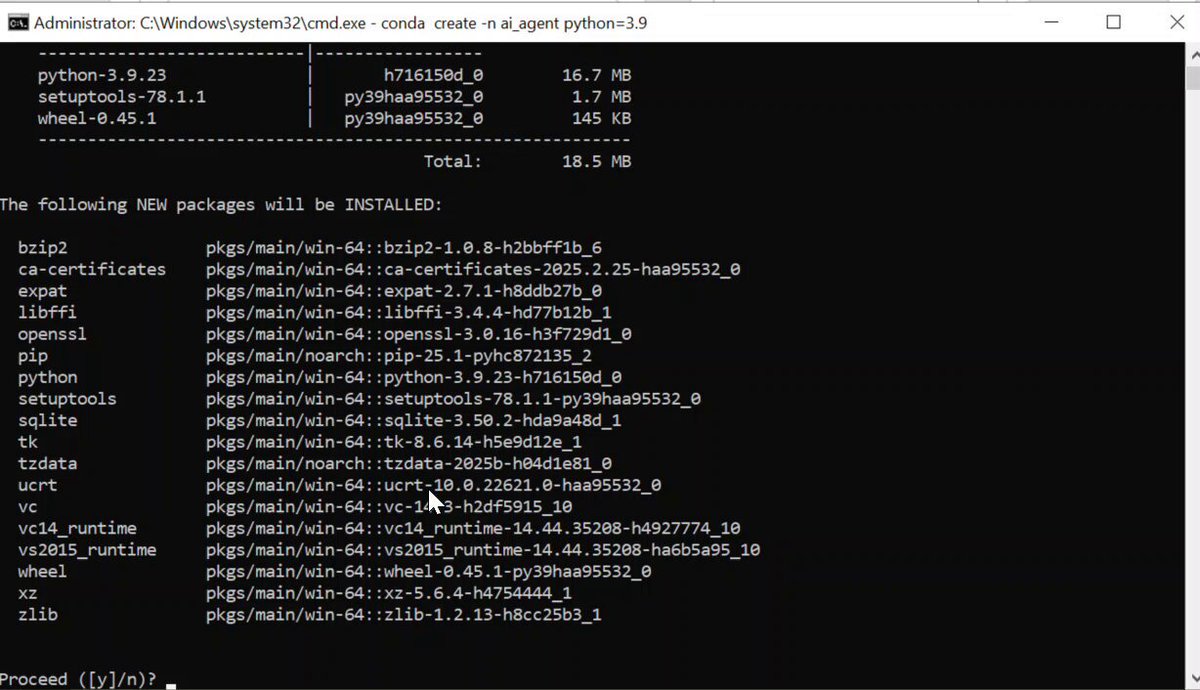

`qoryn deploy --model model.h5 --framework keras --runtime fastapi --region eu-west --autoscale`

This single command: ✔️ Builds container ✔️ Signs runtime ✔️ Publishes endpoint ✔️ Attaches observability ✔️ Deploys to edge

Got `.h5`, `.pt`, or `.onnx` weights? Qoryn supports:

- Automatic framework detection
- Containerized runtime generation
- Multi-region deployment
- Edge node assignment

Every deployment on Qoryn = an AI agent instance. Runtime = containerized execution. Interface = REST / gRPC. Telemetry = real-time logs, usage, latency. No cloud console. No server to babysit. Just code → process → endpoint.

Plotting ...

Activating agent

QORYN CLI Sentience updating....

RT @AutorunCrypto: 🚨 New token! Check the ANALYSIS! (Screenshot) $QOR HydiN3AwN6s3DLqZo86UTgovoHNxRFqE2MpDdVuXbonk 💎 I use Axiom Trade (T…

$QOR Boosted 100x on Dexscreener. CA: HydiN3AwN6s3DLqZo86UTgovoHNxRFqE2MpDdVuXbonk Chart:

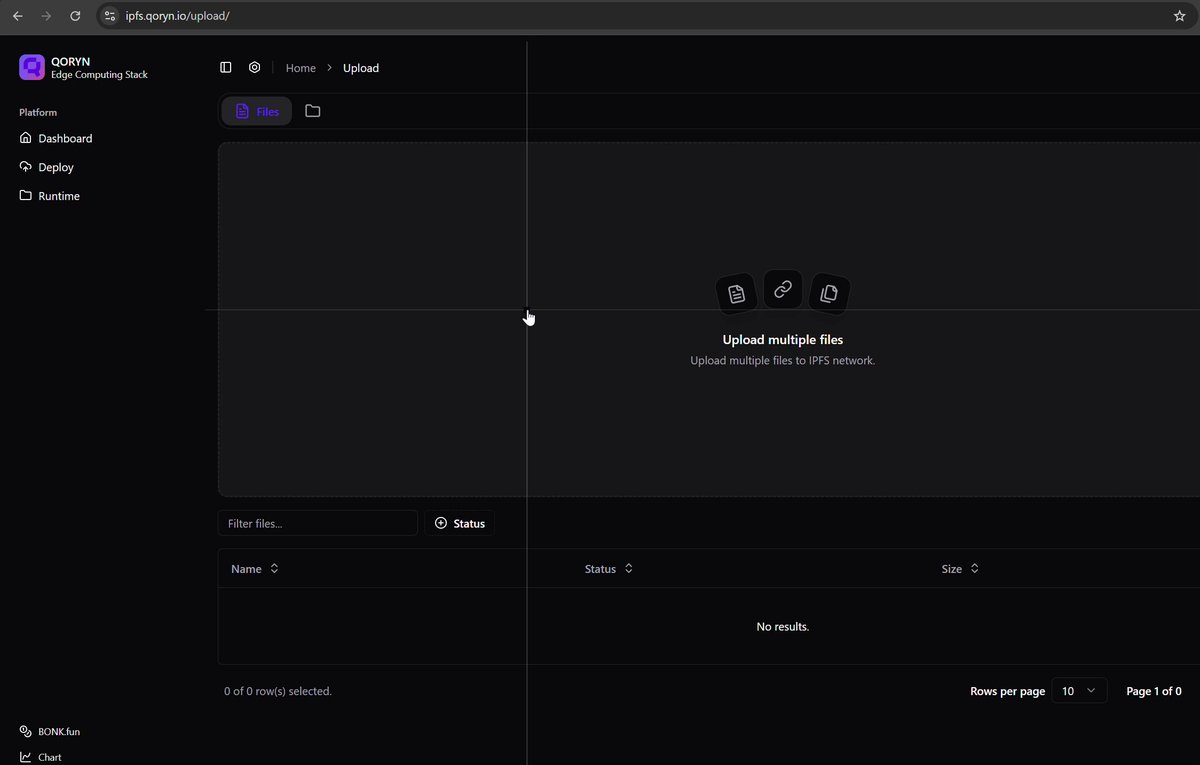

The QORYN CLI will be synced to the web once we are done with our beta-testing.

With Qoryn CLI, we have simplified AI agent creation and inference.

#DexTools just got updated: $QOR is now verified! The chart is now live for real-time tracking of price, volume, and liquidity. Link:

H5 dataset agents are live on the Qoryn CLI. We are currently in the beta testing phase of Qoryn CLI.

H5 files are the backbone of AI agent deployments.

$QOR is live on Solana through the Bonk ecosystem. Qoryn is a decentralized deployment field for AI systems. Instead of relying on centralized clouds, models are packaged into containers and dispatched across an encrypted mesh of runtime nodes. These nodes scale on their own,

🚀 Dev loop on Qoryn:

1. `qoryn init --framework fastapi`
2. Drop in your weights + handler
3. `qoryn deploy`
4. Benchmark the endpoint
5. Scale regionally

One CLI. No cloud consoles. No boilerplate.

Why edge? Because inference shouldn't live in a box on AWS. Qoryn lets you deploy to edge nodes closest to your users or market endpoints. DeFi bots. zk oracles. Chat inference. Latency wins.

Every Qoryn deployment is a sealed container:

- Serving engine (TensorFlow, TorchServe, FastAPI)
- Signed model blob
- Inference endpoint (REST/gRPC)
- Built-in observability hooks

Plug it into your app. Or your DAO. Or your protocol.

`qoryn deploy --model model.onnx --runtime torchserve --edge eu-west`

That’s it. No infra bootstrapping. No secrets to manage. The mesh picks it up. Your model is now live, with live telemetry, cryptographic proofs, and adaptive scaling.

What is $QOR

Centralized AI deployment is a honeypot. Qoryn runs inference on a mesh, not a cloud. Nodes are location-fluid. Routing is encrypted. Surveillance can't track what it can't see.

Deploy with a CLI. Ship containers with model logic. Nodes pick them up, sign them, execute them. Get: ✔️ Sub-100ms latency ✔️ Logs + telemetry ✔️ Self-scaling runners. This isn’t DevOps. This is Qoryn.

You write the model. You define the runtime. Qoryn handles the rest:

- containerization
- gRPC / REST endpoints
- edge deployment
- real-time tracing

Deploy intelligence. Not infrastructure.

Qoryn is not a platform. It’s a deployment field. AI models become portable, executable units. They run on decentralized nodes. They scale on demand. They answer when called. No cloud. No ops. No dashboards. Just runtime.

What if AI didn’t need clouds? What if inference didn’t live on servers? What if models could deploy, scale, and adapt across a mesh? Meet Qoryn.

Listing statistics

138

18 days ago

SeBuDA

Our headquarters recently moved from the USA to the Netherlands so that we can add more features for our users. We are confident users will feel the benefit of this change in the future; the decision was made for their convenience.

Netherlands

Sebuda B.V.

CoC Number: 95490469

Zuid-Hollandlaan 7, 2596 AL 's-Gravenhage

The Hague, The Netherlands

(+31)0687365374

Follow us on social media

Social media accounts for sale

Help and Services

© 2022 SeBuDA.com, All rights reserved.